What Is Semantic Segmentation in AI/ML?

Semantic segmentation is a type of computer vision task that involves assigning each pixel in an image to a semantic class, such as "person," "car," "tree," or "building." This is in contrast to image classification, which assigns a single class label to the entire image.

Because every pixel receives a label, the output divides the image into regions or segments that correspond to different objects or object parts. Unlike object detection, which draws bounding boxes around objects, semantic segmentation provides a pixel-level understanding of the image content.
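To make the idea of a per-pixel label concrete, here is a minimal sketch in plain Python. It assumes a model has already produced one score per class for every pixel; the `scores` layout, the `label_map` helper, and the class names are illustrative assumptions, not part of any particular library. Real pipelines would normally do this with an array library rather than nested lists.

```python
def label_map(scores):
    """Turn per-pixel class scores into a per-pixel label map.

    scores[y][x] is a list with one score per class (assumed layout).
    Each pixel gets the index of its highest-scoring class.
    """
    return [
        [max(range(len(pixel)), key=pixel.__getitem__) for pixel in row]
        for row in scores
    ]


CLASSES = ["background", "person", "car"]  # illustrative class names

# A tiny 2x3 "image": every pixel carries one score per class.
scores = [
    [[0.9, 0.05, 0.05], [0.2, 0.7, 0.1], [0.1, 0.8, 0.1]],
    [[0.8, 0.1, 0.1], [0.1, 0.2, 0.7], [0.3, 0.3, 0.4]],
]

labels = label_map(scores)
for row in labels:
    print([CLASSES[c] for c in row])
```

Image classification would reduce the same image to a single class name, whereas the label map above keeps one class per pixel.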

Here are the key characteristics and concepts associated with semantic segmentation:

- Pixel-Level Classification: Semantic segmentation aims to classify each pixel in an image into one of several predefined classes or categories. Each pixel is assigned a label representing the object or region it belongs to.

- Object Recognition: Semantic segmentation allows the model to recognize and distinguish different objects and object parts within an image, even when they are partially occluded or overlapping.

- Contextual Understanding: It captures the context of each pixel within the image, as the classification decision is influenced by neighboring pixels. This context helps in distinguishing objects with similar colors or textures.

- Instance Segmentation vs. Semantic Segmentation: Semantic segmentation assigns each pixel a class label, so all objects of the same class share one label. Instance segmentation goes a step further by distinguishing between different instances of the same class: each instance receives its own unique label. For example, two cars in a scene are both labeled "car" in semantic segmentation, while instance segmentation separates them into two distinct cars.

- Applications: Semantic segmentation is used in a wide range of applications, including autonomous driving (to identify road lanes, vehicles, pedestrians), medical image analysis (for organ segmentation), image and video editing, robotics (for scene understanding), and augmented reality (for object interaction).
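The difference between a semantic label map and an instance label map can be illustrated with a toy example. The sketch below takes a small semantic map in which two separate cars share the same class id, then assigns each 4-connected blob its own instance id using a flood fill. This is only an illustration of the two output formats: the `split_instances` helper and the class id are hypothetical, and connected-component splitting is a stand-in that fails when instances touch, which is why dedicated instance segmentation models exist.

```python
from collections import deque


def split_instances(semantic, target_class):
    """Give each 4-connected blob of `target_class` its own instance id.

    Returns (instance_map, count). instance_map is 0 where the pixel is
    not `target_class`, otherwise 1, 2, ... per connected blob.
    """
    height, width = len(semantic), len(semantic[0])
    instances = [[0] * width for _ in range(height)]
    next_id = 0
    for y in range(height):
        for x in range(width):
            if semantic[y][x] != target_class or instances[y][x]:
                continue  # wrong class, or already assigned to a blob
            next_id += 1
            instances[y][x] = next_id
            queue = deque([(y, x)])
            while queue:  # breadth-first flood fill over the blob
                cy, cx = queue.popleft()
                for ny, nx in ((cy - 1, cx), (cy + 1, cx),
                               (cy, cx - 1), (cy, cx + 1)):
                    if (0 <= ny < height and 0 <= nx < width
                            and semantic[ny][nx] == target_class
                            and not instances[ny][nx]):
                        instances[ny][nx] = next_id
                        queue.append((ny, nx))
    return instances, next_id


CAR = 2  # illustrative class id

# Semantic map: both cars carry the same label, 2.
semantic = [
    [0, 2, 2, 0, 0],
    [0, 2, 2, 0, 2],
    [0, 0, 0, 0, 2],
]

instances, count = split_instances(semantic, CAR)
print(count)      # number of separate car blobs found
for row in instances:
    print(row)
```

In the semantic map every car pixel is indistinguishable from any other car pixel; in the instance map the left blob and the right blob carry different ids.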

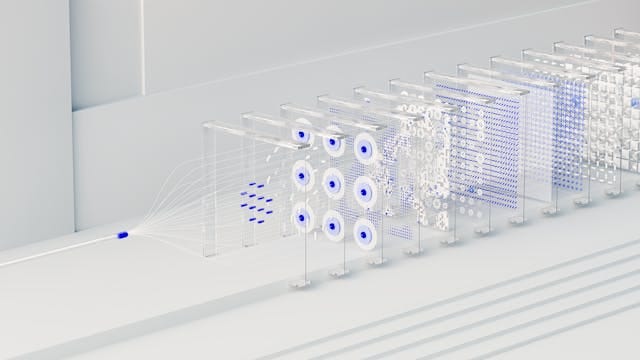

- Architecture: Convolutional Neural Networks (CNNs) are commonly used for semantic segmentation tasks. U-Net, FCN (Fully Convolutional Network), and DeepLab are popular architectures for semantic segmentation.

- Loss Function: Semantic segmentation models are typically trained using a loss function that measures the difference between predicted labels and ground truth labels at the pixel level. Common loss functions include cross-entropy loss and Dice loss.

- Post-Processing: After segmentation, post-processing techniques may be used to refine the results. Connected component analysis can, for example, remove small spurious regions, and contour extraction can be used to obtain more precise object boundaries.

- Data Annotation: Semantic segmentation datasets require pixel-level annotation, where each pixel in the training images is labeled with the corresponding object class. This is a labor-intensive task often done manually.

- Challenges: Semantic segmentation can be challenging in cases of object occlusion, object scale variation, and complex scenes with multiple overlapping objects.
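The two loss functions named above can be written out for a toy image to show what "difference at the pixel level" means. This is a minimal sketch in plain Python, assuming the model outputs a probability per class for each pixel; the function names, the data layout, and the smoothing constant `eps` are assumptions made for illustration. Deep learning frameworks ship their own optimized versions.

```python
import math


def cross_entropy(probs, target):
    """Mean pixel-wise cross-entropy.

    probs[y][x] is a list of class probabilities (assumed to sum to 1);
    target[y][x] is the ground-truth class id for that pixel.
    """
    total, n = 0.0, 0
    for prob_row, target_row in zip(probs, target):
        for pixel_probs, cls in zip(prob_row, target_row):
            # Clamp to avoid log(0) when the true class gets probability 0.
            total -= math.log(max(pixel_probs[cls], 1e-12))
            n += 1
    return total / n


def dice_loss(probs, target, cls, eps=1e-6):
    """Soft Dice loss for one class: 1 - 2|P∩G| / (|P| + |G|)."""
    intersection = pred_sum = target_sum = 0.0
    for prob_row, target_row in zip(probs, target):
        for pixel_probs, true_cls in zip(prob_row, target_row):
            p = pixel_probs[cls]                    # predicted probability
            g = 1.0 if true_cls == cls else 0.0     # ground-truth indicator
            intersection += p * g
            pred_sum += p
            target_sum += g
    # eps keeps the ratio defined when the class is absent everywhere.
    return 1.0 - (2.0 * intersection + eps) / (pred_sum + target_sum + eps)


# Toy 1x2 image with two classes (0 = background, 1 = object).
target = [[0, 1]]
perfect = [[[1.0, 0.0], [0.0, 1.0]]]      # confident and correct
uncertain = [[[0.5, 0.5], [0.5, 0.5]]]    # no preference for either class

print(cross_entropy(perfect, target), dice_loss(perfect, target, cls=1))
print(cross_entropy(uncertain, target), dice_loss(uncertain, target, cls=1))
```

Both losses are near zero for the perfect prediction and grow as the prediction becomes less certain. Cross-entropy averages over all pixels, while Dice measures overlap for one class, which is one reason Dice is often considered when the object of interest covers only a small part of the image.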

Semantic segmentation has numerous practical applications in computer vision.