What is a Recurrent Neural Network (RNN) in AI/ML?

A Recurrent Neural Network (RNN) is a type of artificial neural network designed for processing sequences of data. Unlike feedforward neural networks, where information flows in one direction from input to output, RNNs have connections that loop back on themselves, allowing them to maintain a hidden state, or memory, of previous inputs. This looping mechanism makes RNNs well-suited to sequential data such as time series and natural language text, and to other applications where temporal dependencies are important.

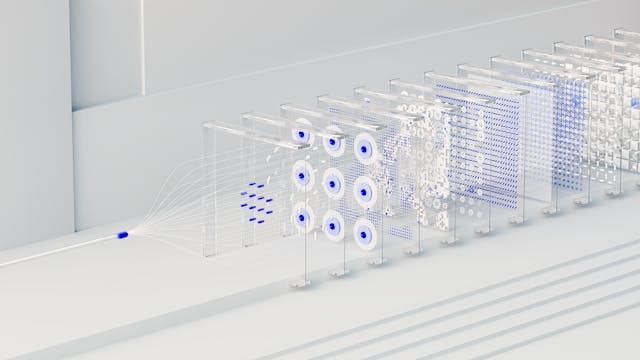

An RNN is built from one or more recurrent layers. At each time step, a layer receives the current input together with its own output (the hidden state) from the previous step, and passes its new output on to the next layer. This feedback lets the network carry information forward through the sequence, so in principle it can learn dependencies between elements that are far apart.
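
The feedback loop can be sketched in a few lines of NumPy. This is a minimal illustration of a single vanilla recurrent layer, not a production implementation: the function name `rnn_forward`, the weight names `W_xh`, `W_hh` and `b_h`, the tanh activation, and the layer sizes are all assumptions chosen for the example.

```python
import numpy as np

def rnn_forward(inputs, W_xh, W_hh, b_h, h0):
    """Run a vanilla RNN layer over a sequence and return every hidden state."""
    h = h0
    hidden_states = []
    for x_t in inputs:  # one iteration per time step
        # The new state depends on the current input and the previous state.
        # The same weights are reused at every step.
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)
        hidden_states.append(h)
    return np.stack(hidden_states)

# Hypothetical sizes and random weights, for illustration only.
rng = np.random.default_rng(0)
input_size, hidden_size, seq_len = 3, 4, 5
W_xh = rng.normal(scale=0.5, size=(hidden_size, input_size))
W_hh = rng.normal(scale=0.5, size=(hidden_size, hidden_size))
b_h = np.zeros(hidden_size)
inputs = rng.normal(size=(seq_len, input_size))

states = rnn_forward(inputs, W_xh, W_hh, b_h, np.zeros(hidden_size))
print(states.shape)  # (5, 4): one hidden state per time step
```

Because each state is computed from the previous one, changing the first input changes every later hidden state, which is the sense in which the layer "remembers" earlier parts of the sequence.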

RNNs are typically trained with gradient descent, using gradients computed by backpropagation through time (BPTT). BPTT unrolls the network across the time steps of a sequence and applies ordinary backpropagation to the unrolled network, so an error observed at a later step can adjust how earlier inputs were processed.
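
To make the training procedure concrete, here is a small NumPy sketch of BPTT followed by plain gradient descent. It is an illustration under stated assumptions rather than a reference implementation: the function name `bptt`, the squared-error loss on the final hidden state, the absence of bias terms, and the toy data, sizes and learning rate are all choices made for this example.

```python
import numpy as np

def bptt(inputs, target, W_xh, W_hh, h0):
    """Forward pass, squared-error loss on the final state, then BPTT."""
    # Forward: unroll over time and cache every hidden state.
    hs = [h0]
    for x_t in inputs:
        hs.append(np.tanh(W_xh @ x_t + W_hh @ hs[-1]))
    loss = 0.5 * np.sum((hs[-1] - target) ** 2)

    # Backward: walk the unrolled steps in reverse order.
    dW_xh = np.zeros_like(W_xh)
    dW_hh = np.zeros_like(W_hh)
    dh = hs[-1] - target  # gradient of the loss w.r.t. the final state
    for t in reversed(range(len(inputs))):
        da = dh * (1.0 - hs[t + 1] ** 2)  # back through tanh
        dW_xh += np.outer(da, inputs[t])  # weights are shared across steps,
        dW_hh += np.outer(da, hs[t])      # so their gradients accumulate
        dh = W_hh.T @ da                  # pass gradient to the previous state
    return loss, dW_xh, dW_hh

# Toy problem (assumed): push the final hidden state towards a fixed target.
rng = np.random.default_rng(0)
W_xh = rng.normal(scale=0.3, size=(4, 3))
W_hh = rng.normal(scale=0.3, size=(4, 4))
inputs = rng.normal(size=(6, 3))
target = np.full(4, 0.5)
h0 = np.zeros(4)

learning_rate = 0.05
losses = []
for step in range(100):
    loss, dW_xh, dW_hh = bptt(inputs, target, W_xh, W_hh, h0)
    losses.append(loss)
    W_xh -= learning_rate * dW_xh  # gradient descent update
    W_hh -= learning_rate * dW_hh

print(losses[0], losses[-1])
```

Deep learning frameworks perform the backward pass automatically; writing it out by hand mainly shows that BPTT is ordinary backpropagation applied to the unrolled network, with the gradients of the shared weights summed over time steps.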

Key characteristics and components of RNNs include:

- Recurrent Connections: RNNs are characterized by recurrent connections between neurons. These connections allow information to flow from the current step in the sequence to the next step and so on, enabling the network to capture temporal dependencies.

- Hidden State: RNNs maintain a hidden state vector that represents the network's internal memory or context. This hidden state is updated at each time step based on the input at that step and the previous hidden state.

- Time Unfolding: To understand how RNNs work, they are often "unfolded" over time, creating a series of interconnected layers, one for each time step. This unfolding helps visualize the flow of information through the network.

- Shared Parameters: RNNs typically share the same set of parameters (weights and biases) across all time steps. This parameter sharing ensures that the network can learn to recognize patterns and relationships consistently throughout the sequence.

- Vanishing Gradient Problem: RNNs are susceptible to the vanishing gradient problem, where gradients shrink as they are propagated back through many time steps, making it difficult to learn long-term dependencies. This limitation has led to the development of more advanced RNN variants, such as Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs).
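
The vanishing gradient problem from the last point can be observed numerically. The sketch below tracks how strongly the hidden state at step t still depends on the initial state by multiplying the per-step Jacobians of a tanh RNN. The small recurrent weight scale, the random inputs, and the omission of a separate input weight matrix are assumptions made to keep the example short.

```python
import numpy as np

rng = np.random.default_rng(0)
hidden_size = 8
# Assumed setup: small random recurrent weights.
W_hh = rng.normal(scale=0.1, size=(hidden_size, hidden_size))

h = np.zeros(hidden_size)
jacobian = np.eye(hidden_size)  # d h_t / d h_0, starts as the identity
norms = []
for t in range(50):
    x_t = rng.normal(size=hidden_size)  # random stand-in for the input term
    h = np.tanh(x_t + W_hh @ h)
    # Chain rule: d h_t / d h_{t-1} = diag(1 - h_t^2) @ W_hh
    step_jacobian = (1.0 - h ** 2)[:, None] * W_hh
    jacobian = step_jacobian @ jacobian
    norms.append(np.linalg.norm(jacobian))

for t in (0, 9, 49):
    print(f"step {t + 1:2d}: |d h_t / d h_0| = {norms[t]:.3e}")
```

With these settings the norm falls towards zero as the number of steps grows, so a loss computed at a late step provides almost no gradient signal about the earliest inputs. With large recurrent weights the same product can grow instead, which is the related exploding gradient problem.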

What are the most successful and practical applications of Recurrent Neural Networks (RNNs) in AI/ML?

Here are some of the most successful and practical applications of RNNs in AI and ML:

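- Machine translation: RNNs have been widely used for machine translation, that is, translating text from one language to another. For example, Google Translate, which supports over 100 languages, adopted an LSTM-based neural translation system in 2016, although many translation systems have since moved to Transformer-based models.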

- Text summarization: RNNs are used to condense long texts into shorter ones. For example, an encoder-decoder RNN can read a news article and generate a short summary of it.

- Speech recognition: RNNs are used to transcribe audio to text. For example, RNN-based models have been used in voice assistants such as Siri and Alexa to transcribe spoken commands.

- Natural language processing (NLP): RNNs are used for a variety of NLP tasks, such as sentiment analysis, question answering, and text generation. For example, they can be used to flag hate speech on social media and to generate responses to customer inquiries in customer service chatbots.

- Sequence modeling: RNNs are used for sequence modeling tasks, such as predicting the next element in a sequence, generating text, and time series forecasting.