What are Autoencoders in AI/ML?

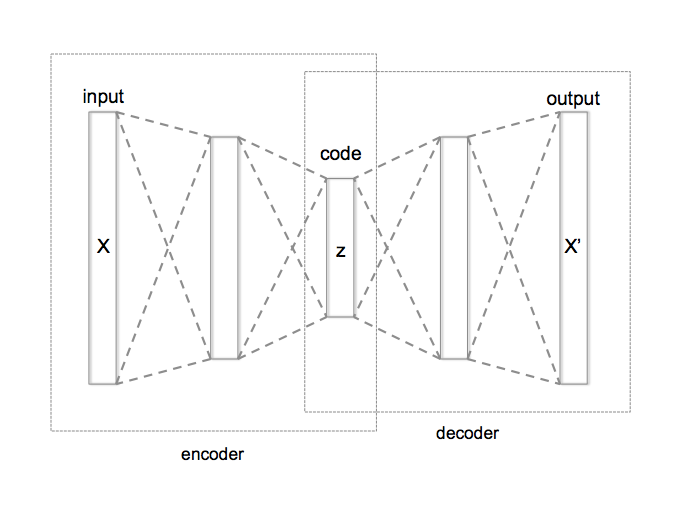

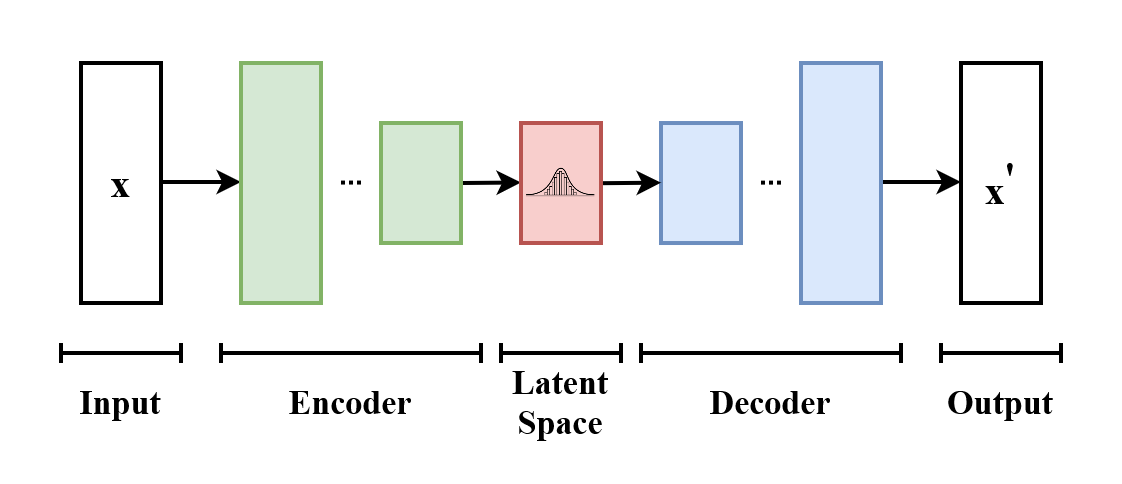

Autoencoders are a class of artificial neural networks used in artificial intelligence (AI) and machine learning (ML) for various purposes, including dimensionality reduction, feature learning, data compression, and generative modeling. They belong to the family of unsupervised learning algorithms and are designed to encode input data into a lower-dimensional representation and then decode it to reconstruct the original input as closely as possible. Autoencoders consist of an encoder and a decoder, and they are commonly used for tasks like data denoising, anomaly detection, and generating new data samples.

Key components and concepts of autoencoders include:

- Encoder: The encoder is the first part of the autoencoder, and its purpose is to transform the input data into a compressed or latent representation. This is typically achieved through a series of layers, such as fully connected (dense) layers or convolutional layers, that reduce the dimensionality of the input data.

- Latent Space: The latent space is the lower-dimensional representation of the input data learned by the encoder. It captures the essential features and patterns in the data while discarding unnecessary details.

- Decoder: The decoder is the second part of the autoencoder, and it reconstructs the input data from the latent representation. It typically mirrors the encoder, expanding the compressed data back to the original data dimensionality. The result is an approximation of the input, not an exact inverse of the encoding.

- Reconstruction Loss: To train an autoencoder, a reconstruction loss function (commonly mean squared error, or binary cross-entropy for inputs scaled to [0, 1]) measures the difference between the input data and the reconstructed data. Training minimizes this loss, which pushes the autoencoder to keep in the latent space the information needed to rebuild the input.
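
To make the four components above concrete, here is a minimal sketch of a linear autoencoder in plain Python. It is an illustration under stated assumptions, not a production implementation: the toy dataset, the starting weights, the learning rate, and the helper names (`encode`, `decode`, `reconstruction_loss`, `train_step`) are all hypothetical choices made for this example. Real autoencoders use nonlinear layers and a deep learning framework.

```python
# Toy data (an assumption for illustration): 2-D points on the line y = 2x,
# so a single latent dimension is enough to describe them.
data = [(t, 2.0 * t) for t in (-1.0, -0.5, 0.0, 0.5, 1.0)]

# Hypothetical starting weights, fixed so the run is reproducible.
w_enc = [0.5, 0.1]  # encoder: 2 inputs -> 1 latent value
w_dec = [0.1, 0.5]  # decoder: 1 latent value -> 2 outputs


def encode(x, w_enc):
    """Compress a 2-D input into a single latent value."""
    return w_enc[0] * x[0] + w_enc[1] * x[1]


def decode(z, w_dec):
    """Expand the latent value back to 2 dimensions."""
    return (w_dec[0] * z, w_dec[1] * z)


def reconstruction_loss(data, w_enc, w_dec):
    """Squared reconstruction error per point, averaged over the dataset."""
    total = 0.0
    for x in data:
        x_hat = decode(encode(x, w_enc), w_dec)
        total += (x_hat[0] - x[0]) ** 2 + (x_hat[1] - x[1]) ** 2
    return total / len(data)


def train_step(data, w_enc, w_dec, lr=0.05):
    """One step of full-batch gradient descent on the reconstruction loss."""
    g_enc = [0.0, 0.0]
    g_dec = [0.0, 0.0]
    n = len(data)
    for x in data:
        z = encode(x, w_enc)
        x_hat = decode(z, w_dec)
        # Gradient of the loss with respect to the reconstruction.
        d_out = [2.0 * (x_hat[i] - x[i]) / n for i in range(2)]
        # Backpropagate through the decoder, then the encoder.
        d_z = sum(d_out[i] * w_dec[i] for i in range(2))
        for i in range(2):
            g_dec[i] += d_out[i] * z
            g_enc[i] += d_z * x[i]
    new_enc = [w_enc[i] - lr * g_enc[i] for i in range(2)]
    new_dec = [w_dec[i] - lr * g_dec[i] for i in range(2)]
    return new_enc, new_dec


initial_loss = reconstruction_loss(data, w_enc, w_dec)
for _ in range(500):
    w_enc, w_dec = train_step(data, w_enc, w_dec)
final_loss = reconstruction_loss(data, w_enc, w_dec)

print(f"loss before training: {initial_loss:.4f}")
print(f"loss after training:  {final_loss:.6f}")
```

Because the toy data lies on a line, the one-dimensional latent value is enough to reconstruct it, so the loss should fall close to zero. On real data a smaller latent space generally leaves some reconstruction error.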

Autoencoders can take different forms and have various architectures, including:

- Vanilla Autoencoders: These consist of fully connected layers and are used for basic dimensionality reduction and reconstruction tasks.

- Variational Autoencoders (VAEs): VAEs are designed to generate new data samples that resemble the training data distribution. The encoder outputs the parameters of a probability distribution over the latent space instead of a single point, and the decoder reconstructs from a sample drawn from that distribution, which makes VAEs suitable for generative modeling.

- Sparse Autoencoders: These autoencoders are regularized to produce sparse representations in the latent space, which can be useful for feature learning and anomaly detection.

- Denoising Autoencoders: Denoising autoencoders are trained on deliberately corrupted inputs and asked to reconstruct the clean originals, making them suitable for noise reduction tasks.

- Convolutional Autoencoders: These are designed for handling image data and use convolutional layers in the encoder and decoder to capture spatial patterns.
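
Several of the variants above differ from a vanilla autoencoder mainly in what is fed to the model or what is added to the loss. The sketch below shows those extra pieces in isolation, in plain Python. The function names and the sample values are hypothetical, Gaussian noise is only one possible corruption for a denoising autoencoder, and an L1 penalty is only one common way to encourage sparsity.

```python
import math
import random


def corrupt(x, sigma, rng):
    """Denoising: add Gaussian noise to the input; the training target stays the clean x."""
    return [xi + rng.gauss(0.0, sigma) for xi in x]


def l1_sparsity_penalty(z, weight):
    """Sparse: term added to the reconstruction loss to push latent activations toward zero."""
    return weight * sum(abs(zi) for zi in z)


def reparameterize(mu, log_var, rng):
    """VAE: sample z = mu + std * eps, with eps drawn from a standard normal."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """VAE: KL divergence from N(mu, diag(exp(log_var))) to N(0, I)."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))


rng = random.Random(0)  # fixed seed so the sketch is reproducible
clean = [0.2, 0.8, 0.5]
noisy = corrupt(clean, sigma=0.1, rng=rng)  # model input; the target is `clean`
penalty = l1_sparsity_penalty([0.0, 1.5, -0.5], weight=0.01)
z = reparameterize(mu=[0.0, 1.0], log_var=[0.0, -2.0], rng=rng)
kl = kl_to_standard_normal(mu=[0.0, 1.0], log_var=[0.0, -2.0])
```

In a full training loop, the sparsity penalty or the KL term would be added to the reconstruction loss before computing gradients. The KL term is zero only when the encoder outputs exactly the standard normal (`mu = 0`, `log_var = 0`).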

Autoencoders can be used for a variety of tasks, including:

1. Data compression: Autoencoders can compress data into a compact latent representation without losing too much information. The compression is lossy, so the reconstruction only approximates the original. This can be useful for reducing the storage space required for data or for making it easier to transmit data over a network.

2. Anomaly detection: Autoencoders can be used to detect anomalies in data by identifying data points that are not well-reconstructed by the autoencoder. This can be useful for fraud detection, medical diagnosis, and other tasks where it is important to identify unusual data points.

3. Feature extraction: Autoencoders can be used to extract features from data. These features can then be used for other machine learning tasks, such as classification and regression.

4. Image generation: Generative variants such as VAEs can be used to generate new images by decoding points sampled from the latent space. This can be useful for tasks such as creating realistic synthetic images for training other AI models or for creating new art and design.
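
The anomaly detection use case can be sketched in a few lines. The example below is a hedged illustration: it uses a fixed linear projection as a stand-in for a trained autoencoder, and the threshold rule (mean plus three standard deviations of the error on normal data) is one common heuristic chosen here as an assumption, not something prescribed above. All names and data points are hypothetical.

```python
import math
import statistics

# Stand-in for a trained autoencoder (an assumption for illustration):
# the encoder projects a 2-D point onto the direction (1, 2), and the
# decoder maps that single latent value back onto the line y = 2x.
NORM = math.sqrt(5.0)
DIRECTION = (1.0 / NORM, 2.0 / NORM)


def encode(x):
    """Project the point onto the learned direction (1 latent value)."""
    return x[0] * DIRECTION[0] + x[1] * DIRECTION[1]


def decode(z):
    """Map the latent value back to a 2-D point on the line."""
    return (z * DIRECTION[0], z * DIRECTION[1])


def reconstruction_error(x):
    """Squared distance between a point and its reconstruction."""
    x_hat = decode(encode(x))
    return (x[0] - x_hat[0]) ** 2 + (x[1] - x_hat[1]) ** 2


# Errors on data assumed to be normal set the decision threshold.
normal_points = [(1.0, 2.1), (-0.5, -0.9), (0.2, 0.5), (2.0, 3.8), (-1.0, -2.2)]
errors = [reconstruction_error(p) for p in normal_points]
threshold = statistics.mean(errors) + 3.0 * statistics.pstdev(errors)


def is_anomaly(x):
    """Flag points that the autoencoder reconstructs poorly."""
    return reconstruction_error(x) > threshold


candidates = [(1.0, 2.0), (2.0, -1.0)]
flags = [is_anomaly(p) for p in candidates]
print(flags)  # prints [False, True]
```

The first candidate follows the same pattern as the normal data and reconstructs almost perfectly. The second lies far from anything the model can represent, so its reconstruction error is large and it is flagged.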